In a previous post, Add Search to Hugo Sites With Azure Search, I explained how I added a search capability to my site using Azure Search. In this post, I’ll show you how I trigger Azure Search to reindex the site each time it’s redeployed as part of my existing Azure Pipeline configuration.

As I explained in my post Automated Hugo Releases with Azure Pipelines, I use Azure YAML Pipelines to deploy my site. What I wanted to do was make sure that whenever the site was updated, Azure Search would reindex it.

What I did was set up an additional stage with a single deployment job in my pipeline for the live site. Whenever a deployment succeeds, I want to tell Azure Search to reindex the site, and I also want to purge the search results page and the search index data file from the CDN.

This involved two steps:

- Create a service principal (Microsoft Entra ID application) that will be used to make the changes in Azure

- Update the Azure pipeline

Create Microsoft Entra ID service principal

As I said above, I want to do two things in Azure: purge the CDN and re-run the indexer. I wanted to do both of these things with the Azure CLI, but its support for Azure Search is quite limited at this time, so I was stuck using the REST API.

However, I can use the CLI for purging the CDN. I’d prefer to have an agent do this rather than a user, so I created a service principal that was granted permission to do this.

To do this, create a new Microsoft Entra ID application in your tenant from within the Azure portal. You don’t need to grant it any permissions, but you do need to create a client secret.

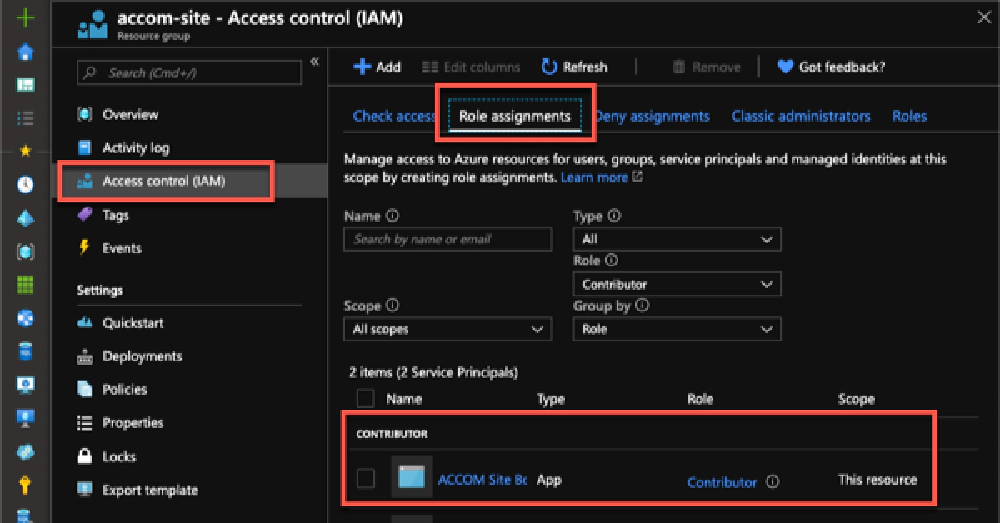

Once you have the app created, head over to your resource group or CDN, whichever level you want to give this app access to, and select the Access control (IAM) menu item:

Azure Resource Group IAM

Here I’ve granted the app the Contributor role on the entire resource group. You can limit this further if you wish. For example, I could have granted it just the CDN Endpoint Contributor role on the resource group or on the actual CDN endpoint, but I use this app for other things that are beyond the scope of this article.
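
If you would rather script this than click through the portal, the same result can probably be reached with the Azure CLI. The following is a minimal sketch, not what I actually ran: the service principal name `accom-bot` and the `AZURE_SUBSCRIPTION_ID` environment variable are hypothetical, and it assigns the narrower CDN Endpoint Contributor role mentioned above rather than Contributor.

```shell
#!/usr/bin/env bash
# Sketch only: the principal name and subscription variable are assumptions.

# Build the ARM scope string for a resource group.
rg_scope() {
  printf '/subscriptions/%s/resourceGroups/%s' "$1" "$2"
}

# Create a service principal and grant it a role on the resource group.
# Arguments: subscription ID, resource group, principal name.
# The JSON it prints includes appId, password (the client secret) and tenant.
create_bot_principal() {
  az ad sp create-for-rbac \
    --name "$3" \
    --role "CDN Endpoint Contributor" \
    --scopes "$(rg_scope "$1" "$2")"
}

# Only call Azure when a subscription ID has been supplied.
if [ -n "${AZURE_SUBSCRIPTION_ID:-}" ]; then
  create_bot_principal "$AZURE_SUBSCRIPTION_ID" accom-site accom-bot
fi
```

The `appId`, `password` and `tenant` values in the output correspond to the client ID, client secret and tenant ID used later in the pipeline.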

With an app created, now I can update the pipeline.

Update Azure Pipeline

While you’re still in the Azure portal, jump over to the Azure Search instance you created, head to the Keys page and copy one of the admin keys. You’ll need this to call the Search REST API.

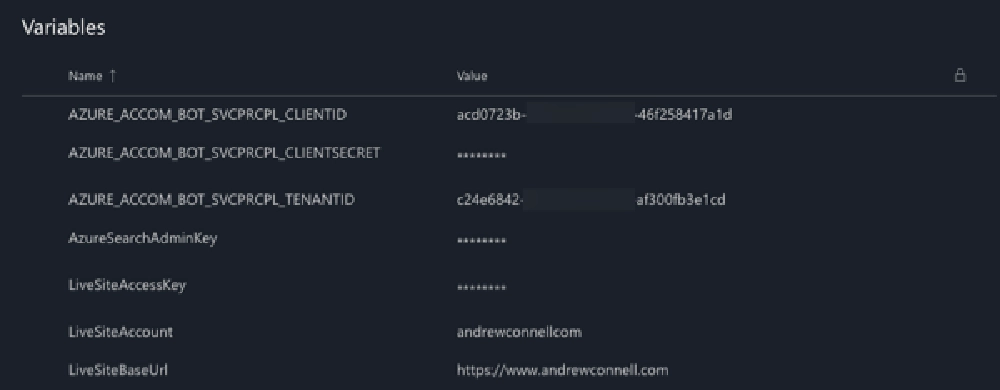

Now, head over to your Azure Pipeline. I first updated my variable group to include details for the new service principal and the Azure Search admin key:

Azure DevOps Variable Group

The last step is to update the pipeline. Here’s what it looks like:

    - stage: live_site_post_deploy
      displayName: Live site post deployment
      dependsOn:
        - live_site_build_deploy
      # only run on pushes to master
      condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/master'))
      jobs:
        - deployment: exec_search_reindex
          displayName: Reindex Azure Search
          pool:
            vmImage: ubuntu-latest
          environment: azure_accom_live_site
          strategy:
            runOnce:
              deploy:
                steps:
                  ######################################################################
                  # sign in to Azure CLI with service principal
                  ######################################################################
                  - script: az login --service-principal --tenant $TENANTID
                              --username $CLIENTID
                              --password $CLIENTSECRET
                    displayName: Login to Azure CLI
                    env:
                      CLIENTID: $(AZURE_ACCOM_BOT_SVCPRCPL_CLIENTID)
                      CLIENTSECRET: $(AZURE_ACCOM_BOT_SVCPRCPL_CLIENTSECRET)
                      TENANTID: $(AZURE_ACCOM_BOT_SVCPRCPL_TENANTID)
                  ######################################################################
                  # Purge search index & search page(s) from CDN
                  ######################################################################
                  - script: az cdn endpoint purge --resource-group accom-site
                              --profile-name cdn-acccom
                              --name cdn-acccom
                              --content-paths '/azureindex/feed.json'
                                              '/search'
                                              '/search/index.html'
                    displayName: Purge search assets from CDN
                  ######################################################################
                  # Use Azure REST API to invoke search indexer
                  # (api-key header is double-quoted so the shell expands the variable)
                  ######################################################################
                  - script: curl -H "api-key:$AZURE_SEARCH_ADMINKEY"
                              -H 'content-length:0'
                              -X POST 'https://accom-site.search.windows.net/indexers/accom-live-indexer/run?api-version=2019-05-06'
                    displayName: Run Azure Search Indexer
                    env:
                      AZURE_SEARCH_ADMINKEY: $(AzureSearchAdminKey)

Let me explain what this does:

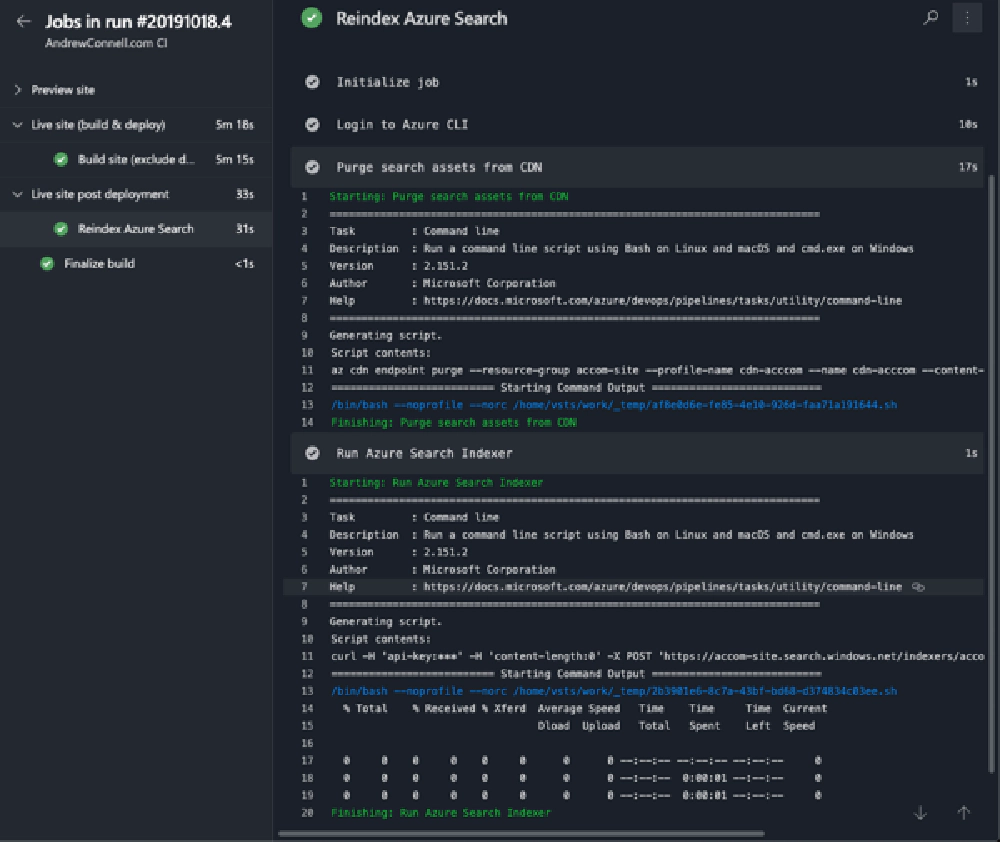

- Create a new stage: I first create a new stage, `live_site_post_deploy`, that depends on the stage `live_site_build_deploy`, because I only want to reindex the site when the deployment succeeds. In this setup I also add a condition so that the stage only runs when a build is triggered on the `master` branch. Technically, this isn’t necessary, as `live_site_build_deploy` only runs on master as well, which would control it.

- Step: Login to Azure CLI: The first step in this deployment job is to log in to the Azure CLI. This is where I’m using the service principal that I created. By injecting the IDs and client secret as environment variables, they won’t get written to the pipeline execution or diagnostic logs.

- Step: Purge CDN: I’m using an Azure CDN on my site for optimal performance. When the site gets updated, I want to make sure the search page is purged from the CDN, as well as the JSON file that Azure Search uses to index the site.

- Step: Reindex the site: The last step is to reindex the site. As I said above, the Azure CLI doesn’t support this at the time of writing, so I’m using the REST API. Here you just need to make sure you use the correct URL for your endpoint, using the name of your search instance and the name of the search indexer you created. When you execute the `run` function, it tells the search indexer to reindex the site.
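
To make the URL construction in that last step concrete, here is a small shell sketch that builds the run URL from the search instance and indexer names and then calls it. The function names are hypothetical, and `--fail` is an addition of mine: without it, curl exits with 0 even when the service rejects the request, so the pipeline step would still show as succeeded.

```shell
#!/usr/bin/env bash
# Sketch only: function names are illustrative, not part of any Azure tooling.

# Build the "run indexer" URL.
# Arguments: search service name, indexer name, optional api-version.
indexer_run_url() {
  printf 'https://%s.search.windows.net/indexers/%s/run?api-version=%s' \
    "$1" "$2" "${3:-2019-05-06}"
}

# POST to the run endpoint using the admin key from the environment.
# --fail makes curl return a non-zero exit code on an HTTP error response.
run_indexer() {
  curl --fail --silent --show-error \
    -X POST \
    -H "api-key: $AZURE_SEARCH_ADMINKEY" \
    -H 'Content-Length: 0' \
    "$(indexer_run_url "$1" "$2")"
}

# Only call the service when an admin key has been supplied.
if [ -n "${AZURE_SEARCH_ADMINKEY:-}" ]; then
  run_indexer accom-site accom-live-indexer
fi
```

Swap `accom-site` and `accom-live-indexer` for your own search instance and indexer names.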

Azure Pipeline Results

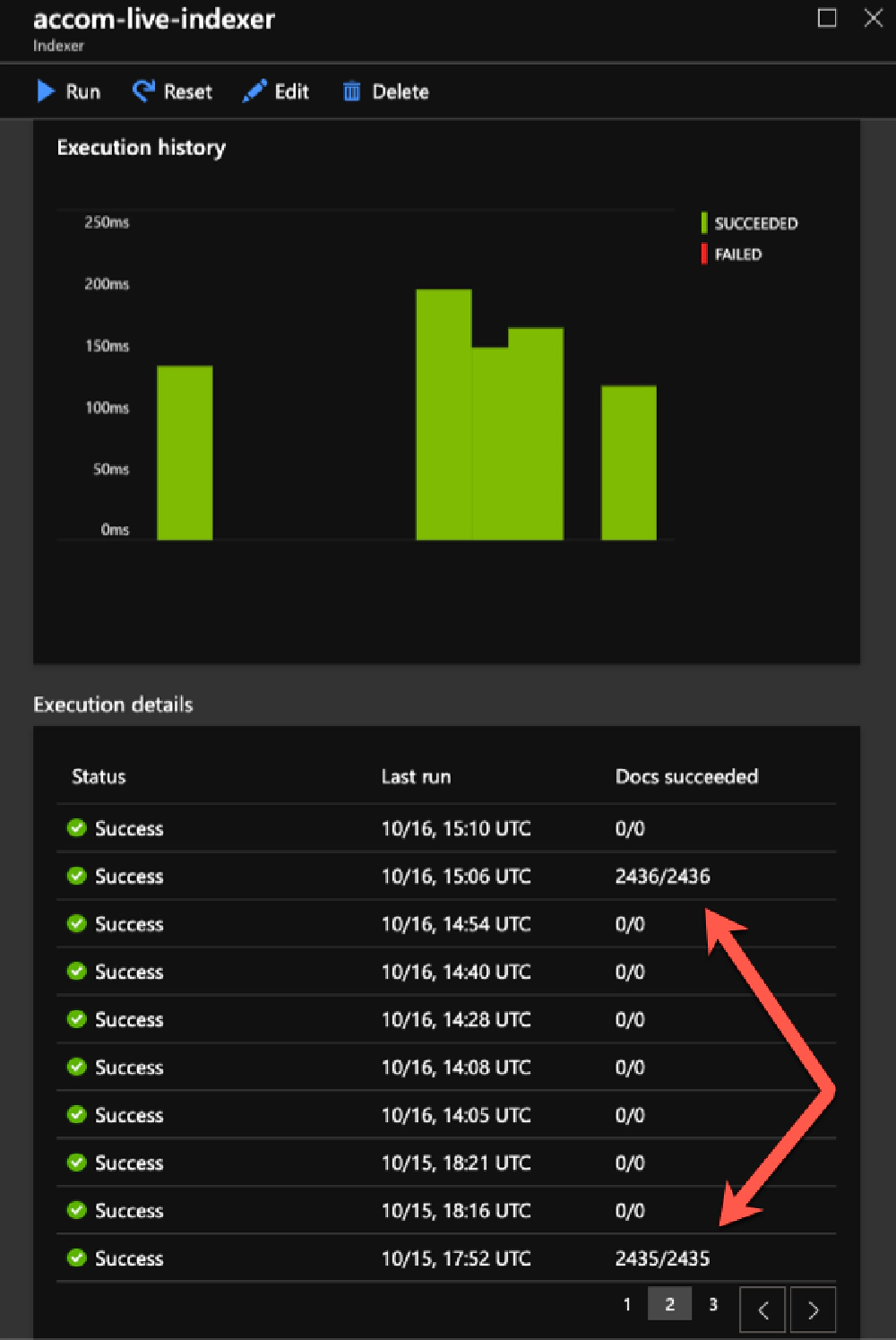

It may seem a bit strange when you look at your indexer results, as they show that 0/0 docs were indexed, but that only happens when the indexer doesn’t see a change to the file. When you actually add content to the site, it will pick up those files.

Azure Indexer Results

For instance, with my Azure pipeline set up, I automatically rebuild & deploy the site a few times a day, picking up any content that I wrote and wanted published at a later time. If nothing changed, then there was nothing new to get indexed. But as you can see from the indexer history above, when I added one new blog post, it picked it up.
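
If you want to check those numbers without opening the portal, the Search REST API also exposes an indexer status endpoint. The sketch below is an assumption-laden example rather than part of my pipeline: the function names are hypothetical, it assumes `jq` is installed, and it reads the `lastResult` fields (`status`, `itemsProcessed`, `itemsFailed`) from the status response.

```shell
#!/usr/bin/env bash
# Sketch only: function names are illustrative; requires curl and jq.

# Build the "get indexer status" URL.
# Arguments: search service name, indexer name, optional api-version.
indexer_status_url() {
  printf 'https://%s.search.windows.net/indexers/%s/status?api-version=%s' \
    "$1" "$2" "${3:-2019-05-06}"
}

# Fetch the status and print a one-line summary of the most recent run.
show_last_indexer_run() {
  curl --fail --silent --show-error \
    -H "api-key: $AZURE_SEARCH_ADMINKEY" \
    "$(indexer_status_url "$1" "$2")" |
    jq -r '.lastResult | "\(.status): \(.itemsProcessed) processed, \(.itemsFailed) failed"'
}

# Only call the service when an admin key has been supplied.
if [ -n "${AZURE_SEARCH_ADMINKEY:-}" ]; then
  show_last_indexer_run accom-site accom-live-indexer
fi
```

A run that found no changed documents should report zero items processed, which matches the 0/0 entries in the indexer history.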